Description

Transform your AI development with OnHover’s latest integrated development environment, combining Langflow, Ollama, and VS Code in a secure, containerized solution. This stack enables developers to build sophisticated AI agents and applications while maintaining complete data privacy.

Key features include:

- Run open-source LLMs locally with Ollama, including Llama 3.2, Gemma 2, and specialized models such as StarCoder2

- Build complex AI workflows visually through Langflow’s intuitive interface

- Develop and test your applications in a browser-based VS Code environment

- Everything runs in a pre-configured container – no setup required

Perfect for teams building private AI solutions, RAG applications, code assistants, or multi-agent systems. Simply deploy the stack, choose your GPU configuration, and start developing with immediate access to both VS Code and Langflow interfaces. Your data stays with you.

Working with the Latest Language Models

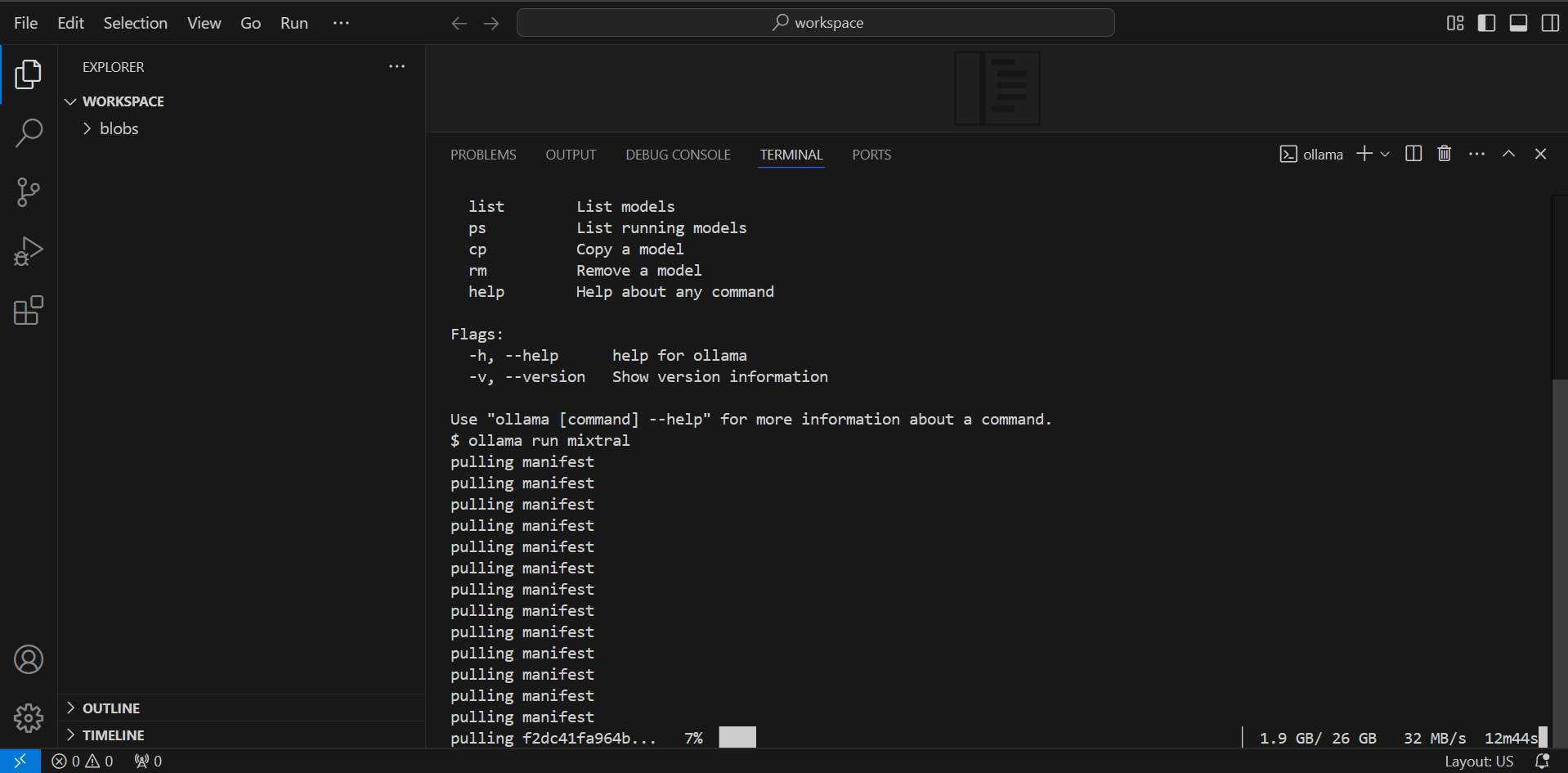

A key strength of this stack is the ability to run current open-weight language models locally through Ollama. As of 2025, you have access to models such as:

    # General-purpose models
    ollama pull llama3.2          # Meta’s Llama 3.2 model
    ollama pull gemma2            # Google’s Gemma 2 model
    ollama pull mistral           # Mistral AI’s 7B model

    # Specialized models
    ollama pull llama3.2-vision   # For image understanding tasks
    ollama pull starcoder2        # Code generation model
    ollama pull deepseek-r1       # Reasoning-focused model

Each model has its specific strengths:

- Llama 3.2: Exceptional at general tasks with strong reasoning capabilities

- Gemma 2: Optimized for efficiency and accuracy

- StarCoder2: Specialized for software development

- DeepSeek-R1: Step-by-step reasoning for math, logic, and analysis tasks
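
Once a model is pulled, it can be queried from the VS Code environment over Ollama's local REST API. The following is a minimal sketch, assuming Ollama is listening on its default address (`http://localhost:11434`) and that the model has already been pulled; the helper names `build_generate_request` and `generate` are illustrative, not part of any library.

```python
import json
import urllib.request

# Assumption: Ollama is reachable on its default local address.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to a locally pulled model and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # With "stream": False the server replies with a single JSON object
        # whose "response" field holds the full completion.
        return json.loads(response.read())["response"]
```

With the server running, a call such as `generate("gemma2", "Summarize RAG in one sentence.")` should return the model's reply as a string. Because the request never leaves the container, the prompt and the answer stay on your own hardware.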

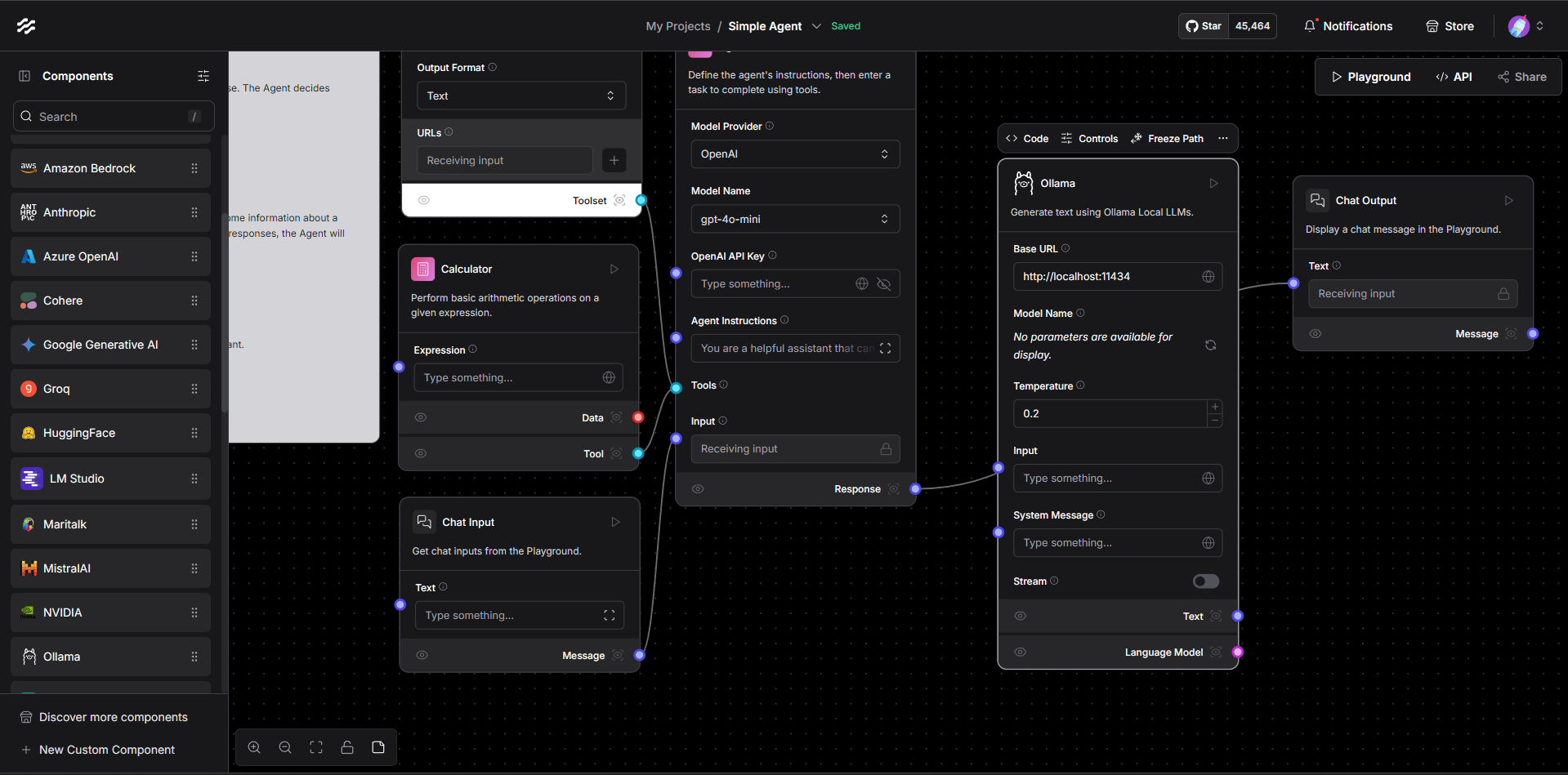

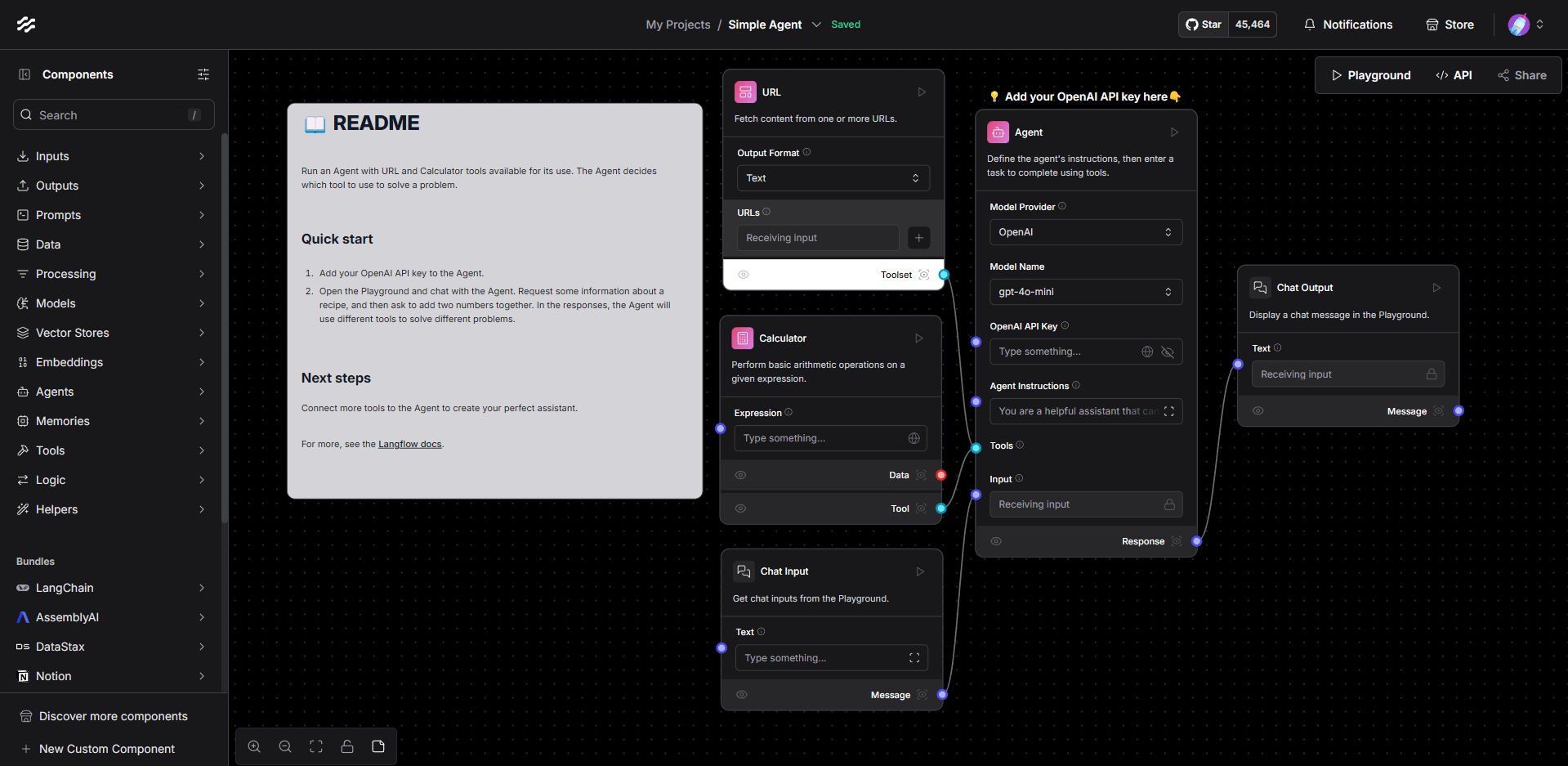

Building Modern AI Agents in Langflow

Langflow has evolved significantly, now offering advanced components for building sophisticated AI applications. Let’s explore how to build a modern AI agent:

- Understanding Component Categories:
  - LLM Integrations: Including the Ollama component for local models
  - Memory Systems: For context retention and conversation history
  - Tool Integration: For external API and data source connections
  - RAG Components: For building knowledge-enhanced applications
  - Multi-Agent Orchestration: For complex, collaborative AI systems

- Creating a Basic Agent: Let’s build a code assistant using StarCoder2. Example workflow components:
  1. Ollama LLM (StarCoder2)
  2. Conversation Memory Buffer
  3. Python REPL Tool
  4. Code Review Chain

Langflow’s drag-and-drop canvas lets you wire these components together visually, and each component exposes parameters for adjusting your agent’s behavior.
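
To make the role of the memory component more concrete, here is a small sketch of what a conversation memory buffer does conceptually: it keeps the most recent turns and prepends them to each new prompt. This is not Langflow's implementation; `ConversationBuffer` and `build_prompt` are hypothetical names used only for illustration.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ConversationBuffer:
    """Keeps only the most recent turns so the prompt stays short."""

    max_turns: int = 4
    turns: deque = field(default_factory=deque)

    def add(self, role: str, text: str) -> None:
        self.turns.append((role, text))
        # Drop the oldest turns once the buffer exceeds its limit.
        while len(self.turns) > self.max_turns:
            self.turns.popleft()

    def render(self) -> str:
        return "\n".join(f"{role}: {text}" for role, text in self.turns)


def build_prompt(system: str, memory: ConversationBuffer, user_message: str) -> str:
    """Combine instructions, remembered turns, and the new message into one prompt."""
    parts = [system]
    history = memory.render()
    if history:
        parts.append(history)
    parts.append(f"user: {user_message}")
    parts.append("assistant:")
    return "\n\n".join(parts)
```

The resulting string is what would be passed to the LLM component. A larger `max_turns` preserves more context at the cost of a longer prompt, which is the main trade-off to tune in any memory component.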

Advanced RAG Implementation

One of the most powerful features in the 2025 stack is the ability to build sophisticated RAG (Retrieval Augmented Generation) systems. Here’s how to set it up:

- Document Processing:
  - Use the Document Loader component for various file formats
  - Configure the Text Splitter for optimal chunk sizes
  - Generate embeddings with an embedding model served through Ollama
- Knowledge Base Creation:
  - Set up vector storage for efficient retrieval
  - Configure similarity search parameters
  - Implement hybrid search strategies
- Agent Integration:
  - Connect the RAG pipeline to your Ollama model
  - Implement conversation memory for context retention
  - Add specialized tools for document manipulation
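
The retrieval side of these steps can be sketched in plain Python. The example below splits text into overlapping chunks and ranks them with a hybrid score that blends vector similarity and keyword overlap. It rests on several assumptions: the bag-of-words `embed` function is a toy stand-in for a real embedding model, the chunk sizes and the `alpha` weight are arbitrary, and all function names are hypothetical rather than Langflow components.

```python
import math
import re
from collections import Counter


def split_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list:
    """Split text into fixed-size character chunks that overlap by `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    return [text[i:i + chunk_size] for i in range(0, max(len(text) - overlap, 1), step)]


def tokenize(text: str) -> list:
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def keyword_score(query: str, chunk: str) -> float:
    """Fraction of distinct query terms that appear in the chunk."""
    terms = set(tokenize(query))
    return len(terms & set(tokenize(chunk))) / len(terms) if terms else 0.0


def hybrid_search(query: str, chunks: list, top_k: int = 3, alpha: float = 0.5) -> list:
    """Rank chunks by alpha * vector similarity + (1 - alpha) * keyword overlap."""
    q_vec = embed(query)
    scored = [
        (alpha * cosine(q_vec, embed(c)) + (1 - alpha) * keyword_score(query, c), c)
        for c in chunks
    ]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:top_k]
```

In a real pipeline, `embed` would call an embedding model and the chunks would live in a vector store, but the flow stays the same: split, embed, score, and pass the top-ranked chunks to the LLM as context. Raising `alpha` favors semantic similarity, while lowering it favors exact keyword matches.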

Best Practices for Development

- Model Selection:
  - Choose models based on your GPU capabilities
  - Consider using Llama 3.2 for general applications
  - Use specialized models for specific tasks (coding, research)
- Resource Management:
  - Monitor GPU memory usage
  - Implement efficient model loading/unloading
  - Use appropriate model quantization when needed
- Agent Development:
  - Start with simple workflows and iterate
  - Test thoroughly with various input types
  - Document your component configurations
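
For model selection and quantization, a rough memory estimate helps before pulling anything. The sketch below uses a common rule of thumb: weight memory is roughly parameter count times bits per weight divided by eight, plus some headroom for the KV cache and runtime. The 1.2 overhead factor is an assumption, not a measured value, and the function names are hypothetical. The `keep_alive` field in the last helper is a parameter of Ollama's `/api/generate` endpoint; setting it to `0` asks the server to unload the model right away.

```python
from typing import Optional, Sequence


def estimate_vram_gb(params_billion: float, bits_per_weight: int = 4, overhead: float = 1.2) -> float:
    """Rough VRAM estimate in GB: weight size plus an assumed 20% headroom."""
    weight_gb = params_billion * bits_per_weight / 8
    return weight_gb * overhead


def pick_quantization(
    params_billion: float,
    gpu_vram_gb: float,
    options: Sequence[int] = (16, 8, 4),
) -> Optional[int]:
    """Return the highest precision (bits per weight) that fits, or None if none do."""
    for bits in options:  # options are ordered from highest to lowest precision
        if estimate_vram_gb(params_billion, bits) <= gpu_vram_gb:
            return bits
    return None


def unload_request_body(model: str) -> dict:
    """Body for POST /api/generate that asks Ollama to unload the model immediately."""
    return {"model": model, "keep_alive": 0}
```

For example, `pick_quantization(8, 12)` suggests 8-bit weights for an 8B model on a 12 GB GPU under these assumptions. Treat the numbers as a starting point and confirm with actual GPU memory monitoring, since context length and batch size also affect usage.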

Troubleshooting Modern Deployments

Common scenarios you might encounter:

- Model Loading:

      # If a model seems stuck during loading:
      ollama list              # Check current models
      ollama rm modelname      # Remove if necessary
      ollama pull modelname    # Fresh download

- Performance Optimization:
  - Adjust batch sizes for your GPU
  - Configure model parameters for efficiency
  - Monitor memory usage patterns
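
The same check can be scripted when a workflow fails because a model is missing. This sketch reads the list of installed models from Ollama's `/api/tags` endpoint, assuming the default local address; the helper names are hypothetical, and the parsing relies only on the `models[].name` fields of the response.

```python
import json
import urllib.request

# Assumption: Ollama is reachable on its default local address.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(tags_json: str) -> list:
    """Extract model names from the JSON body returned by GET /api/tags."""
    data = json.loads(tags_json)
    return [model["name"] for model in data.get("models", [])]


def is_installed(model: str, names: list) -> bool:
    """True if `model` matches an installed name, with or without an explicit tag."""
    return any(name == model or name.split(":", 1)[0] == model for name in names)


def installed_models(timeout: float = 10.0) -> list:
    """Ask the local Ollama server which models are currently installed."""
    with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags", timeout=timeout) as response:
        return parse_model_names(response.read().decode("utf-8"))
```

Calling `is_installed("gemma2", installed_models())` before starting a flow gives an early, readable failure instead of a stalled component. If the call to `installed_models` itself times out, the Ollama server is likely not running, which narrows the problem before you remove and re-pull anything.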

Looking Forward

The combination of Langflow’s visual development environment, Ollama’s local models, and VS Code’s development capabilities provides a robust platform for building AI applications, including:

- Advanced code generation systems

- Multi-modal AI applications

- Complex RAG-based knowledge systems

- Multi-agent collaborative systems

Whichever of these you are building, this stack gives you the tools to do it while maintaining complete control over your data and development process.