Description

Core Features and Benefits:

Complete Control Over Your AI:

- Run various open-source LLMs privately on your dedicated GPU instance

- Full data sovereignty with no external API dependencies

- Customizable model configurations and parameters

- Support for multiple popular models including Llama, Gemma, and Mistral

User-Friendly Interface:

- Intuitive chat interface through OpenWebUI

- Easy model management and switching

- Built-in RAG (Retrieval-Augmented Generation) capabilities

- No command-line expertise required

- Multi-chat session support

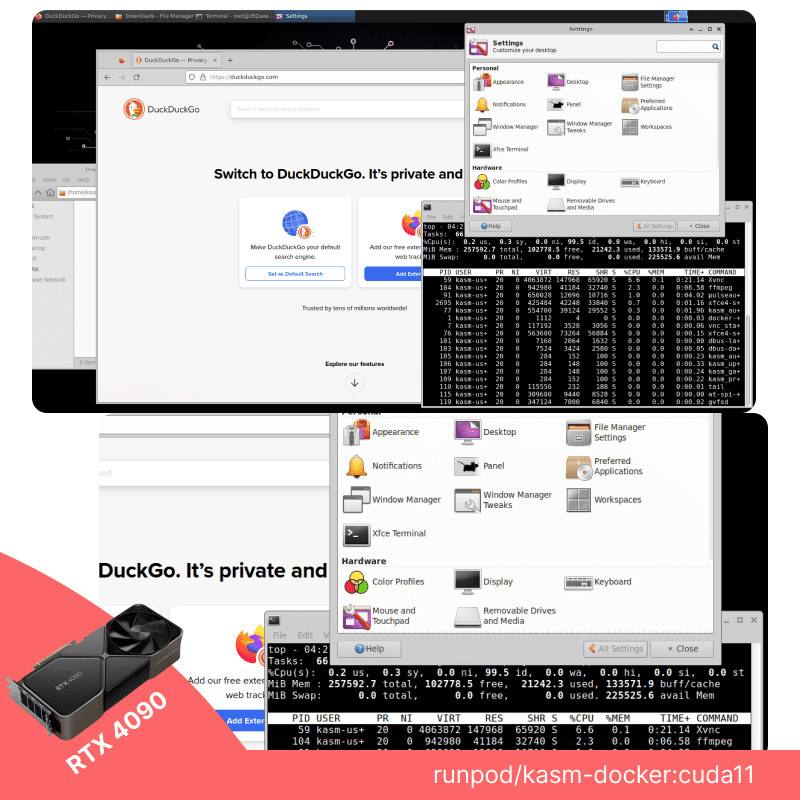

Flexible GPU Options: We offer a range of GPU configurations to match your specific needs:

- Entry-level: RTX A4000 (16GB) for basic LLM operations

- Mid-range: RTX A5000 (24GB) / RTX 4090 (24GB) for improved performance

- High-performance: RTX A6000 (48GB) for handling larger models

- Enterprise-grade: H100 configurations (up to 4x 80GB) for maximum performance and multi-model operations
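As a rough way to match a model to one of the tiers above, the sketch below estimates VRAM from parameter count and quantization width. The formula (weights = parameters x bits per weight / 8, plus 20% headroom for the KV cache and runtime buffers) is a common rule of thumb and an assumption here, not a figure from this platform. The function names are hypothetical, and multi-GPU H100 configurations are not modeled.

```python
# Single-GPU VRAM per tier in GB, taken from the list above.
GPU_TIERS_GB = {
    "RTX A4000": 16,
    "RTX A5000 / RTX 4090": 24,
    "RTX A6000": 48,
    "H100": 80,
}


def estimate_vram_gb(params_billion, bits_per_weight=4, overhead=0.2):
    """Rule-of-thumb VRAM estimate in GB (assumed formula, not a guarantee)."""
    weights_gb = params_billion * bits_per_weight / 8
    return weights_gb * (1 + overhead)


def smallest_fitting_tier(params_billion, bits_per_weight=4):
    """Return the smallest single-GPU tier that fits the estimate, or None."""
    needed = estimate_vram_gb(params_billion, bits_per_weight)
    for name, vram_gb in sorted(GPU_TIERS_GB.items(), key=lambda kv: kv[1]):
        if needed <= vram_gb:
            return name
    return None
```

For example, `smallest_fitting_tier(7)` evaluates a 7B model at 4-bit quantization, while `smallest_fitting_tier(70, bits_per_weight=16)` returns `None` because the estimate exceeds any single GPU in the table. Actual usage depends on context length and the runtime, so treat the result as a starting point.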

Perfect For:

- AI researchers requiring private model experimentation

- Developers building AI-powered applications

- Organizations needing secure, offline AI capabilities

- Teams requiring a collaborative AI workspace

- Projects requiring fine-tuned or custom models

Technical Advantages:

- Built-in inference engine for RAG

- Support for model fine-tuning

- Extensible plugin system

- Multi-model concurrent running

- Easy model downloading and management

OpenWebUI and Ollama, running on enterprise-grade GPUs, give you a complete solution for running AI models in a private, controlled environment. Whether you’re running basic chatbots or complex AI applications, the platform offers the flexibility, power, and ease of use you need.

How to Use?

Initial Setup Process:

- After deploying your instance, an automated setup process begins that downloads and configures both OpenWebUI and Ollama

- The initial setup typically takes around 8 minutes to complete

- Setup time may vary depending on server internet speed and the size of applications/models being deployed

- You can monitor progress in the “Instance Status” section by refreshing the page

- Note: To protect your privacy, we don’t access your instance directly, so the setup time shown is only an estimate

Accessing OpenWebUI:

- Once setup is complete, click the “Launch OpenWebUI” button to open the interface in a new tab

- Your instance comes pre-loaded with Qwen 2.5 (1.5B parameters), a lightweight but capable model to get you started immediately

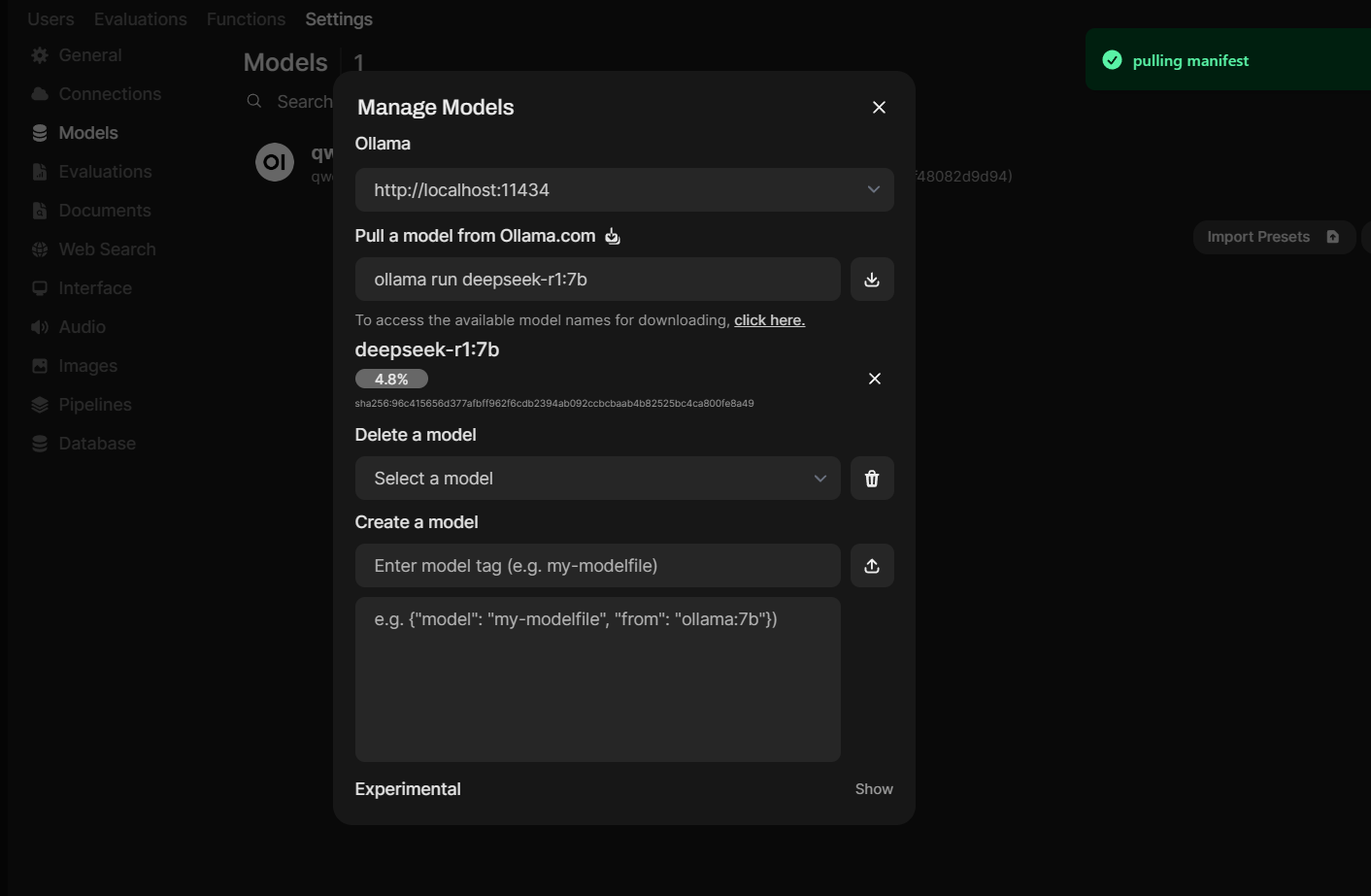

Adding New Models: To add more models to your instance:

- Navigate to Settings > Admin Settings > Models tab

- In the “Pull a model from Ollama.com” section, enter the model name you wish to download

- Click the download button to begin the model installation

- A progress indicator will show the download and setup status

- Once complete, return to the home screen

- Select your newly downloaded model from the model selector dropdown at the top
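The same download can also be scripted against Ollama's HTTP API, which exposes a `POST /api/pull` endpoint. The sketch below is a minimal illustration under two assumptions: that Ollama is reachable on its default port 11434 from wherever the script runs (this page does not say whether the instance exposes that port), and that `llama3.2` is the model you want. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's default address; adjust to your own instance.
OLLAMA_URL = "http://localhost:11434"


def build_pull_request(model, base_url=OLLAMA_URL):
    """Build (but do not send) a request asking Ollama to download a model."""
    body = json.dumps({"model": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull_model(model, base_url=OLLAMA_URL):
    """Send the pull request and return Ollama's final JSON status object."""
    with urllib.request.urlopen(build_pull_request(model, base_url)) as resp:
        return json.load(resp)
```

Calling `pull_model("llama3.2")` blocks until the download finishes because streaming is disabled. The model then appears in the OpenWebUI model selector just as if it had been pulled through the Models tab.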

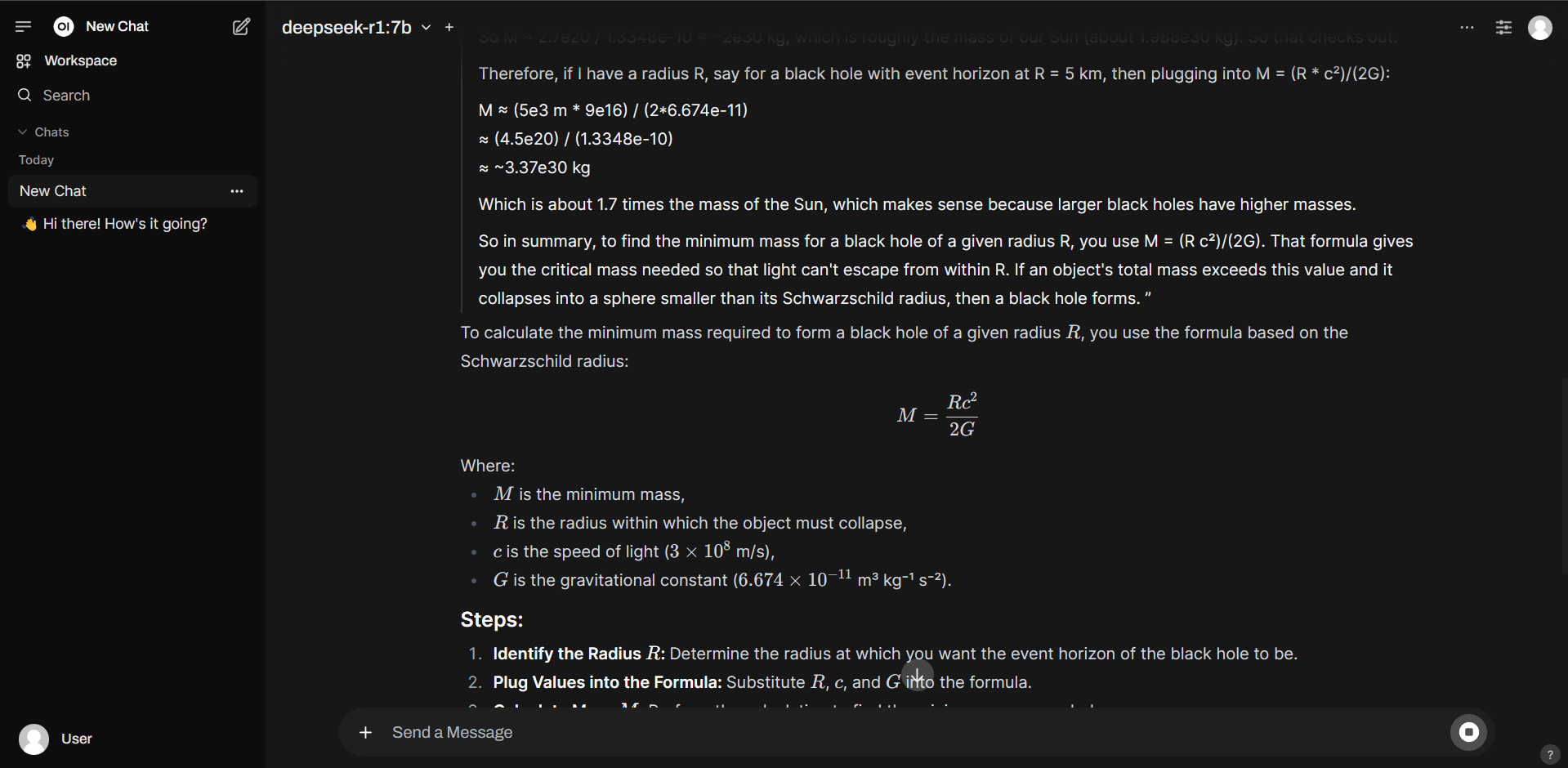

Using Models:

- Start a “New Chat” to begin interacting with your selected model

- You can switch between different models using the dropdown menu at the top of the chat interface

- Each model maintains separate chat histories

- You can create multiple chat sessions with different models running simultaneously
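For developers who want to talk to a model from code rather than the chat interface, Ollama also offers a `POST /api/chat` endpoint. The following sketch shows how a request could be assembled and a reply read back. It assumes Ollama is reachable at its default port 11434 and that the pre-loaded model is available under the tag `qwen2.5:1.5b`; both are assumptions, and the function names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default address
DEFAULT_MODEL = "qwen2.5:1.5b"         # assumed tag for the pre-loaded model


def build_chat_payload(model, history, user_text):
    """Return a chat payload without modifying the caller's history list."""
    messages = list(history) + [{"role": "user", "content": user_text}]
    return {"model": model, "messages": messages, "stream": False}


def extract_reply(response):
    """Pull the assistant's text out of a non-streaming /api/chat response."""
    return response["message"]["content"]


def send_chat(payload, base_url=OLLAMA_URL):
    """POST the payload to Ollama and return the decoded JSON response."""
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

A typical call is `extract_reply(send_chat(build_chat_payload(DEFAULT_MODEL, [], "Hello")))`. Keeping one message list per chat session and passing it as `history` mirrors how separate sessions stay independent in the interface.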

Tips:

- Start with the pre-installed Qwen 2.5 model while downloading larger models in the background

- Check the Ollama model library for compatible models and their requirements

- Consider your GPU specifications when choosing models to download

- Keep track of your storage usage when downloading multiple models

- You can delete unused models through the Models tab to free up space
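To keep an eye on storage from a script, Ollama's `GET /api/tags` endpoint lists installed models together with their size in bytes. The sketch below summarizes such a response. The sample data is made up for illustration, and `summarize_models` is a hypothetical helper, not part of Ollama or OpenWebUI.

```python
def summarize_models(tags_response):
    """Return ([(name, size_gb), ...] sorted largest first, total_gb)."""
    rows = [
        (model["name"], model["size"] / 1e9)
        for model in tags_response.get("models", [])
    ]
    rows.sort(key=lambda row: row[1], reverse=True)
    total_gb = sum(size_gb for _, size_gb in rows)
    return rows, total_gb


# Made-up sample in the shape returned by GET /api/tags (sizes in bytes).
sample = {
    "models": [
        {"name": "qwen2.5:1.5b", "size": 1_000_000_000},
        {"name": "example-large:latest", "size": 4_000_000_000},
    ]
}

rows, total_gb = summarize_models(sample)
for name, size_gb in rows:
    print(f"{name}: {size_gb:.1f} GB")
print(f"total: {total_gb:.1f} GB")
```

The largest entries at the top of the list are the natural candidates to delete through the Models tab when space runs low.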

Remember: Your instance runs completely privately – all data and interactions remain within your dedicated environment. The system is designed to be user-friendly while providing the flexibility to run various AI models according to your needs.
